At Computex 2025 in Taipei, NVIDIA announced a significant step forward in AI hardware. For the first time, the company is making its advanced chip-interconnect technology, NVLink Fusion, available to other chip designers to speed up communication between AI chips. The move aims to help companies build powerful custom AI systems by letting multiple chips work together more efficiently and exchange data much faster. This matters more and more as AI models grow larger and more complex.

Marvell Technology and MediaTek, two leading chipmakers, have already signed on to use NVLink Fusion in their upcoming products. NVLink itself isn't new. NVIDIA has used it for years to link its top chips together, as in the GB200, which combines two Blackwell GPUs with a Grace CPU. By opening NVLink Fusion to the wider industry, however, NVIDIA is making it possible for others to build their own high-performance AI hardware rather than depending solely on NVIDIA's own systems.

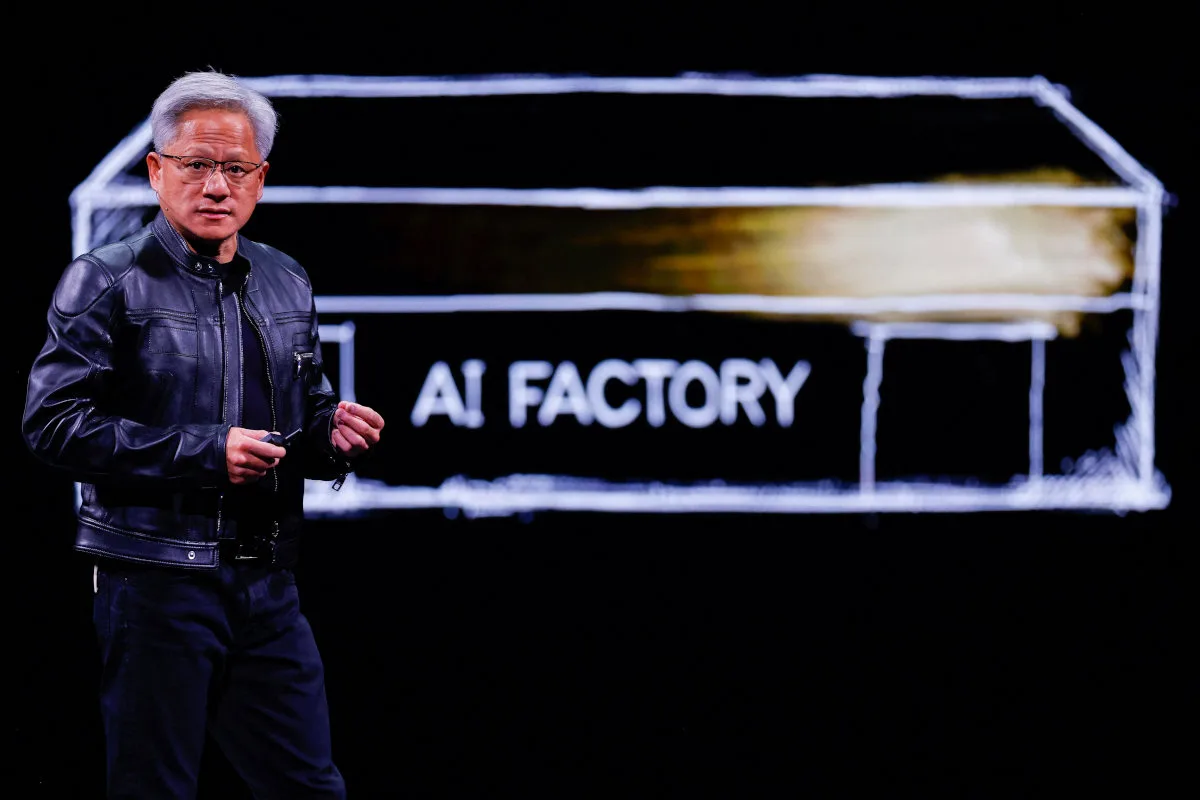

During his keynote, NVIDIA CEO Jensen Huang highlighted how the company has evolved. His presentations once focused mostly on gaming graphics cards, but NVIDIA is now at the heart of the AI revolution, powering everything from cloud computing to robotics. Since the launch of ChatGPT in 2022, NVIDIA's chips have become essential for training and running large AI models. The company has also expanded into designing CPUs that can run Microsoft Windows, using technology from Arm Holdings.

Huang also introduced several new NVIDIA products:

- Blackwell Ultra: A next-generation AI chip coming later this year, offering more performance for demanding AI workloads.

- Rubin and Feynman processors: Future chips planned for release through 2028, promising further leaps in AI computing power.

- DGX Spark: A desktop AI supercomputer for researchers that will be available soon. This compact system brings data-center-level AI power to the desktop. It supports AI models with up to 200 billion parameters and includes NVIDIA's full AI software stack.

This year's Computex is especially significant because it is the first major tech event in Asia since global trade tensions escalated, underscoring how important companies like NVIDIA are to the future of technology and supply chains.

Summary

- The DGX Spark and DGX Station are part of NVIDIA’s push to make AI supercomputing more accessible, with the DGX Station offering up to 20 petaflops of AI performance and 784 GB of memory for even larger-scale AI projects.

- NVIDIA’s new chip roadmap includes the Vera Rubin GPU (expected in 2026), which will be able to handle up to 50 petaflops of AI inference, and the Rubin Ultra (2027), which combines four GPUs for up to 100 petaflops. The Feynman chips, arriving in 2028, will push these capabilities even further.

- NVIDIA's collaborations with major PC makers such as ASUS, Dell, HP, and Lenovo should make these advanced AI systems widely available to researchers and developers worldwide.

In summary, NVIDIA's announcements at Computex 2025 mark a new era. The company is no longer just building powerful chips for itself. It is also helping the whole industry accelerate AI development by sharing its advanced technologies. Its plan to sell technology that speeds up communication between AI chips is an important step for the field.